DevOps interview questions and answers to help you prepare for your next DevOps interview. Get to know more, check out our blog post!

We've put together the DevOps interview questions and answers that we consider the most important. Any of these questions can come up in a DevOps job interview, so whether you're sitting on the employer's side or attending as a candidate, we recommend reading through them and brushing up on your knowledge.

We've written example answers for many of the questions, but please don't repeat them word for word. Instead, talk about what you have actual personal experience with. The examples are there to give you a feel for how experienced professionals discuss these topics in an interview.

Good luck with the DevOps interview!

Continuous Integration and Continuous Delivery (CI/CD)

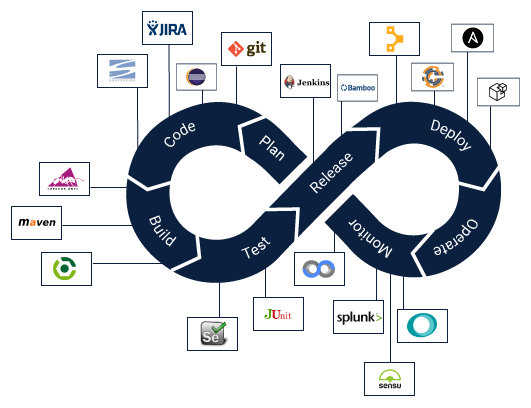

CI/CD stands for Continuous Integration and Continuous Delivery/Deployment. It is a software development practice that emphasizes frequent integration of code changes, automated testing, and rapid deployment to production environments. In a DevOps context, CI/CD enables teams to streamline the development and release process, ensuring that code changes are regularly integrated, tested, and delivered to end-users efficiently and reliably.

This approach helps improve software quality, accelerate time-to-market, and promote collaboration among development, operations, and quality assurance teams. DevOps interview questions and answers often include topics related to CI/CD to assess a candidate's understanding of this critical practice in modern software development.

Q1. How would you explain the concept of CI/CD to a non-technical stakeholder?

A1. CI/CD, which stands for Continuous Integration and Continuous Delivery, is a software development approach that focuses on automating the building, testing, and deployment of applications.

It allows developers to frequently and reliably release software updates to production environments.

In simpler terms, CI/CD ensures that changes to the software are thoroughly tested and delivered quickly, making the development process more efficient and reducing the risk of errors.

Q2. Can you describe the benefits of implementing a CI/CD pipeline?

A2. Implementing a CI/CD pipeline offers several benefits, such as increased development speed, higher quality software, and improved collaboration between development and operations teams.

It enables developers to integrate code changes frequently, catch bugs earlier in the development cycle, and automate the deployment process. This leads to faster feedback loops, faster time to market, and more reliable software releases.
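
To make the idea of "catching bugs earlier" concrete, here is a minimal Python sketch of a pipeline that runs its stages in order and stops at the first failure, so a broken test suite never reaches the deploy step. The stage functions (`build_app`, `run_tests`, `deploy_app`) are hypothetical placeholders; a real pipeline would be defined in your CI tool's own configuration format and would call actual build and test commands.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed, stopping pipeline")
            break
        completed.append(name)
    return completed


# Hypothetical stages, for illustration only.
def build_app() -> bool:
    return True


def run_tests() -> bool:
    return False  # simulate a failing test suite


def deploy_app() -> bool:
    return True


result = run_pipeline(
    [("build", build_app), ("test", run_tests), ("deploy", deploy_app)]
)
print(result)  # ['build']
```

Because the test stage fails, the deploy stage is never executed. That is the fast feedback loop in miniature.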

Q3. What tools or technologies have you used to implement CI/CD pipelines?

A3 example answer:

"I have experience with various CI/CD tools and technologies, including popular ones like Jenkins, Travis CI, GitLab CI/CD, and CircleCI. These tools provide capabilities for automating the building, testing, and deployment of applications. Additionally, I have worked with containerization technologies like Docker and orchestration tools like Kubernetes to support the deployment and scalability aspects of CI/CD pipelines."

Q4. Have you encountered any challenges while setting up or maintaining a CI/CD pipeline? How did you overcome them?

A4 example answer:

"Yes, setting up and maintaining CI/CD pipelines can pose challenges. One common challenge is ensuring the proper configuration and synchronization of multiple environments, such as development, testing, and production.

To overcome this, I have implemented infrastructure as code practices, using tools like Terraform or CloudFormation, which allow for consistent and reproducible environments. I have also invested in comprehensive testing strategies, including unit tests, integration tests, and automated acceptance tests, to catch issues early and maintain stability in the pipeline."

Q5. How do you ensure the security and stability of a CI/CD pipeline?

A5. Security and stability are crucial aspects of a CI/CD pipeline.

To ensure security, I implement security best practices such as secure credential management, encrypted communication channels, and role-based access control. I also regularly update and patch the CI/CD tools and infrastructure components to mitigate potential vulnerabilities.

For stability, I follow robust testing practices, including automated tests at various stages of the pipeline. I also leverage monitoring and alerting systems to detect and address any performance or availability issues promptly.
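
As a small illustration of the secure credential management mentioned above, the sketch below reads a secret from the environment instead of hard-coding it, fails loudly when it is missing, and masks it before it can reach a log line. The variable name `DEPLOY_TOKEN` and the helper names are assumptions made for this example; in practice the value would usually be injected by your CI tool's secret store.

```python
import os
from typing import Mapping, Optional


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not available."""


def get_secret(name: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return the secret called `name`, or raise if it is unset or empty."""
    source = os.environ if env is None else env
    value = source.get(name)
    if not value:
        raise MissingSecretError(f"required secret {name} is not set")
    return value


def mask(value: str) -> str:
    """Return a log-safe form that shows only the last 4 characters."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]


# Hypothetical usage with an explicit mapping instead of the real environment.
token = get_secret("DEPLOY_TOKEN", {"DEPLOY_TOKEN": "abcd1234"})
print(mask(token))  # ****1234
```

Failing fast on a missing secret is a deliberate choice here: a pipeline that silently continues with an empty credential tends to fail later in a much less obvious way.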

Source: https://medium.com/cuelogic-technologies

Configuration Management

Configuration management in DevOps refers to the practice of managing and maintaining the configurations of software systems and infrastructure in a consistent and automated manner. It involves keeping track of configurations, ensuring they are properly documented, version-controlled, and deployed consistently across different environments.

Configuration management helps ensure reproducibility, reduces errors and enables efficient provisioning and deployment of resources. It is a crucial topic often discussed in DevOps interview questions and answers.

Let's get into it!

Q6. What is configuration management, and why is it important in a DevOps environment?

A6. Configuration management is the process of managing and maintaining the consistency and state of software and infrastructure configurations throughout their lifecycle.

It involves tracking and controlling changes to configuration items, ensuring proper versioning, and enforcing consistency across different environments. In a DevOps environment, configuration management is essential for enabling scalability, reproducibility, and efficient management of infrastructure and software components.

Q7. Which configuration management tools have you worked with, and what are their key features?

A7 example answer:

"I have worked with popular configuration management tools such as Ansible, Puppet, and Chef. Ansible offers a simple, agentless approach that connects to target systems over SSH, while Puppet and Chef are typically run with an agent on each managed node that fetches its configuration from a central server. These tools enable infrastructure automation, configuration drift detection, and centralized management of configurations, making it easier to manage complex environments and enforce consistency."

Q8. How do you handle configuration drift and ensure consistency across different environments?

A8. Configuration drift occurs when the actual state of a system or infrastructure deviates from its intended state. To handle configuration drift, I utilize configuration management tools to continuously monitor and enforce desired configurations.

Regular audits and automated checks help identify any deviations, and the configuration management system is responsible for remediation. By keeping configurations under strict control, we can ensure consistency and reduce the risk of unexpected issues in different environments.
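
A drift check boils down to comparing the desired state with the actual state. The following Python sketch shows that comparison on plain dictionaries; the setting names are made up for illustration, and real configuration management tools do this against live system state rather than in-memory data.

```python
from typing import Any, Dict, Tuple


def detect_drift(
    desired: Dict[str, Any], actual: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (desired_value, actual_value)} for every differing key.

    A key missing on one side is reported with None on that side.
    """
    drift: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(set(desired) | set(actual)):
        want, have = desired.get(key), actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical settings, for illustration only.
desired_state = {"nginx_version": "1.24", "max_connections": 1024, "tls": True}
actual_state = {"nginx_version": "1.24", "max_connections": 512, "debug": True}

drift = detect_drift(desired_state, actual_state)
for key, (want, have) in drift.items():
    print(f"{key}: desired={want!r} actual={have!r}")
```

An empty result means the system matches its intended state. Anything else is a candidate for remediation or at least for an alert.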

Q9. Can you explain the difference between push-based and pull-based configuration management approaches?

A9. Push-based configuration management involves a central server or control node initiating the change and pushing configurations out to the target systems. This approach is often agentless: Ansible, for example, connects to the targets over SSH and applies changes on demand. In contrast, pull-based configuration management involves target systems periodically pulling or fetching configurations from a central server.

This approach typically relies on agents running on the target systems that fetch configurations at regular intervals. Both approaches have their merits, and the choice depends on factors such as scalability, security requirements, and network architecture.
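
The key difference is who initiates the change. The Python sketch below simulates both models in memory; `Node`, `ConfigServer`, and `PullAgent` are hypothetical classes written for this example and do not correspond to any real tool's API.

```python
from typing import Dict, List


class Node:
    """A managed system holding its currently applied configuration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.config: Dict[str, str] = {}

    def apply(self, config: Dict[str, str]) -> None:
        self.config = dict(config)


class ConfigServer:
    """Central source of truth for the desired configuration."""

    def __init__(self, config: Dict[str, str]) -> None:
        self.config = config

    def push(self, nodes: List[Node]) -> None:
        # Push model: the server initiates and writes to each node.
        for node in nodes:
            node.apply(self.config)


class PullAgent:
    """Pull model: an agent on the node asks the server for its config."""

    def __init__(self, node: Node, server: ConfigServer) -> None:
        self.node = node
        self.server = server

    def poll_once(self) -> bool:
        """Fetch and apply the config if it differs; return True if changed."""
        changed = self.node.config != self.server.config
        if changed:
            self.node.apply(self.server.config)
        return changed


server = ConfigServer({"ntp_server": "time.example.com"})

pushed_node = Node("web-1")
server.push([pushed_node])  # change happens when the server decides

pulled_node = Node("web-2")
agent = PullAgent(pulled_node, server)
first_poll = agent.poll_once()   # node was empty, so config is applied
second_poll = agent.poll_once()  # nothing changed since the last poll
```

In a real pull setup, `poll_once` would run on a timer, which is why pull-based systems converge with a delay but keep working when new nodes appear without the server knowing about them.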

Q10. Have you used infrastructure-as-code (IaC) tools for configuration management? If so, which ones and what were the benefits?

A10 example answer:

"Yes, I have used infrastructure-as-code (IaC) tools like Terraform and CloudFormation. These tools allow me to define infrastructure configurations as code, enabling version control, reproducibility, and scalability. IaC facilitates automation, simplifies resource provisioning, and helps in ensuring consistency across environments."

Infrastructure as Code (IaC)

Infrastructure as Code (IaC) in DevOps is an approach that involves managing and provisioning infrastructure resources through machine-readable configuration files, scripts, or templates. It treats infrastructure configurations, such as servers, networks, and storage, as code, enabling version control, repeatability, and automation.

With IaC, infrastructure can be provisioned, updated, and decommissioned programmatically, using tools like Terraform or AWS CloudFormation. This approach ensures consistency, reduces manual effort, facilitates collaboration, and allows for infrastructure changes to be tested, reviewed, and deployed through the same CI/CD pipelines used for application code. Implementing IaC is a crucial topic in our DevOps interview questions and answers blog post.

Q11. What are the advantages of using infrastructure as code in a DevOps workflow?

A11. Using infrastructure as code brings several advantages, such as version control, repeatability, and scalability. It enables teams to manage infrastructure configurations as code, reducing manual effort, ensuring consistency, and allowing for rapid and reliable provisioning of resources.

Infrastructure changes become auditable and can be tested, reviewed, and deployed through the same CI/CD pipelines used for application code.

Q12. Which IaC tools or frameworks have you utilized, and why did you choose them?

A12 example answer:

"I have experience with tools like Terraform and AWS CloudFormation. Terraform is a versatile tool that supports multi-cloud environments and offers a wide range of providers. AWS CloudFormation is specific to AWS but integrates well with other AWS services. I choose these tools based on their community support, documentation, ease of use, and compatibility with the target infrastructure."

Q13. Can you explain the concept of idempotency in the context of infrastructure as code?

A13. In the context of infrastructure as code, idempotency means that applying the same configuration repeatedly produces the same result without unintended side effects.

It ensures that running the IaC code multiple times won't cause unnecessary changes or errors. Idempotency allows for safe and reliable infrastructure updates and helps maintain the desired state of resources.
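
A tiny Python sketch can show the difference between an idempotent and a non-idempotent operation. The helper names and the sample setting are assumptions made for this illustration: `ensure_line` describes a desired end state ("this line is present"), while `append_line` describes an action ("add this line").

```python
from typing import List, Tuple


def append_line(lines: List[str], line: str) -> List[str]:
    """Non-idempotent: every call adds another copy of the line."""
    return lines + [line]


def ensure_line(lines: List[str], line: str) -> Tuple[List[str], bool]:
    """Idempotent: add the line only if it is absent.

    Returns the resulting lines and whether a change was made.
    """
    if line in lines:
        return list(lines), False
    return lines + [line], True


config: List[str] = ["PermitRootLogin no"]
setting = "PasswordAuthentication no"

# Applying the idempotent operation twice gives the same result as once.
once, changed_first = ensure_line(config, setting)
twice, changed_second = ensure_line(once, setting)

# Applying the non-idempotent operation twice duplicates the line.
duplicated = append_line(append_line(config, setting), setting)
```

Reporting whether anything changed is a common convention in configuration tools, because it lets a second run confirm that the system was already in the desired state.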

Q14. Have you experienced any challenges or pitfalls while implementing infrastructure as code? How did you handle them?

A14 example answer:

"Yes, challenges can include managing dependencies, handling secrets securely, and dealing with complex infrastructure setups.

To address them, I adopt modular code structures, utilize parameterization and variable management, leverage secure credential management systems, and create comprehensive and reusable templates.

Regular testing, peer reviews, and automation of testing and deployment processes also contribute to overcoming challenges effectively."

Q15. How do you ensure the security and compliance of infrastructure deployed through IaC?

A15 example answer:

"To ensure the security and compliance of infrastructure deployed through IaC, I incorporate security best practices into the IaC code, such as utilizing secure configurations, implementing proper access controls, and encrypting sensitive data.

I regularly review and update the infrastructure code, perform vulnerability scanning, and leverage compliance-as-code frameworks to enforce security and compliance policies."
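
The compliance-as-code idea can be sketched in a few lines of Python: policies are ordinary functions that inspect resource definitions and report violations before anything is deployed. The resource schema used here (`type`, `encrypted`, `port`, `source`) is invented for this example and does not match any particular tool's format.

```python
from typing import Any, Dict, List

Resource = Dict[str, Any]


def check_resources(resources: List[Resource]) -> List[str]:
    """Return a human-readable violation for each resource that breaks a policy."""
    violations: List[str] = []
    for res in resources:
        name = res.get("name", "<unnamed>")
        # Policy 1: storage buckets must be encrypted at rest.
        if res.get("type") == "storage_bucket" and not res.get("encrypted", False):
            violations.append(f"{name}: bucket is not encrypted")
        # Policy 2: SSH must not be open to the whole internet.
        if (
            res.get("type") == "firewall_rule"
            and res.get("port") == 22
            and res.get("source") == "0.0.0.0/0"
        ):
            violations.append(f"{name}: SSH is open to the internet")
    return violations


# Hypothetical resource definitions, for illustration only.
planned = [
    {"name": "logs", "type": "storage_bucket", "encrypted": True},
    {"name": "uploads", "type": "storage_bucket"},
    {"name": "admin-ssh", "type": "firewall_rule", "port": 22, "source": "0.0.0.0/0"},
]

violations = check_resources(planned)
for violation in violations:
    print(violation)
```

Running a check like this as a pipeline step means a non-compliant change is rejected in review rather than discovered in production.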

Cloud Computing

In the context of DevOps interview questions and answers, "cloud computing" refers to the practice of utilizing remote servers, networks, and services over the Internet to store, manage, and process data, rather than relying solely on local infrastructure.

Cloud computing enables organizations to leverage scalable and on-demand resources provided by cloud service providers, such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP). This approach offers several advantages for DevOps, including increased flexibility, scalability, cost-efficiency, and access to a wide range of managed services and tools.

Cloud computing in DevOps allows for rapid provisioning of infrastructure, seamless integration with deployment pipelines, and the ability to automate and orchestrate the entire software delivery process.

Q16. What are the benefits and drawbacks of migrating applications to the cloud?

A16. Migrating applications to the cloud offers benefits like scalability, flexibility, cost-efficiency, and access to a wide range of managed services. It enables rapid provisioning, global availability, and easy integration with other cloud-based services.

However, drawbacks can include vendor lock-in, potential security concerns, data sovereignty issues, and dependency on internet connectivity.

Q17. Which cloud platforms have you worked with, and what services or tools did you utilize?

A17 example answer:

"I have experience working with major cloud platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). I have utilized services such as AWS Elastic Compute Cloud (EC2), AWS Lambda, Azure App Service, Google Kubernetes Engine (GKE), and various managed database services. These platforms offer a wide array of services for infrastructure provisioning, storage, computing, networking, and more."

Q18. Can you describe the process of scaling infrastructure in a cloud environment?

A18. Scaling infrastructure in a cloud environment typically involves horizontal or vertical scaling. Horizontal scaling, also known as scaling out, involves adding more instances of resources, such as virtual machines or containers, to distribute the workload.

Vertical scaling, or scaling up, involves increasing the capacity of existing resources, such as upgrading the CPU or memory of a virtual machine.

Cloud platforms offer autoscaling capabilities that automatically adjust resource capacity based on predefined conditions, ensuring optimal performance and cost efficiency.
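
To illustrate how an autoscaler might turn a "predefined condition" into a replica count, here is a minimal Python sketch of a proportional scaling rule, similar in spirit to the one described in the Kubernetes Horizontal Pod Autoscaler documentation. The function name, parameters, and default bounds are assumptions for this example; real autoscalers add cooldowns, tolerances, and smoothing on top.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric / target, within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 instances at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6

# 4 instances at 30% average CPU with a 60% target: scale in.
print(desired_replicas(4, 30, 60))  # 2
```

The `min_replicas` and `max_replicas` bounds matter as much as the formula: the lower bound protects availability, and the upper bound protects the budget.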

Q19. Have you implemented any cost optimization strategies for cloud-based infrastructure? If so, what were they?

A19 example answer:

"Yes, I have implemented several cost optimization strategies for cloud-based infrastructure. These include rightsizing instances, choosing the appropriate pricing models (e.g., reserved instances or spot instances), implementing scheduling policies to shut down non-production resources during off-hours, and using serverless architectures where applicable.

I also regularly monitor and analyze usage patterns, leverage cost management tools provided by cloud platforms, and optimize storage and data transfer costs."
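
The effect of shutting down non-production resources during off-hours is easy to estimate with simple arithmetic. The Python sketch below uses a made-up hourly rate of 10 cents and a made-up schedule of 12 hours on 22 working days; substitute your own numbers, since actual prices vary by provider, region, and instance type.

```python
def monthly_cost_cents(
    rate_cents_per_hour: int, hours_per_day: int, days_per_month: int
) -> int:
    """Cost of one instance for a month, in cents."""
    return rate_cents_per_hour * hours_per_day * days_per_month


def savings_percent(baseline_cents: int, optimized_cents: int) -> float:
    """Percentage saved relative to the baseline."""
    if baseline_cents <= 0:
        raise ValueError("baseline_cents must be positive")
    return 100 * (baseline_cents - optimized_cents) / baseline_cents


RATE_CENTS = 10  # hypothetical hourly rate

always_on = monthly_cost_cents(RATE_CENTS, 24, 30)     # runs around the clock
office_hours = monthly_cost_cents(RATE_CENTS, 12, 22)  # 12 h on 22 working days

print(always_on, office_hours)                             # 7200 2640
print(round(savings_percent(always_on, office_hours), 1))  # 63.3
```

Working in integer cents avoids floating-point rounding surprises in the cost figures themselves.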

Q20. How do you ensure high availability and fault tolerance in a cloud-based architecture?

A20. High availability and fault tolerance in a cloud-based architecture can be achieved through various practices. This includes distributing applications across multiple availability zones or regions, leveraging load balancing and auto-scaling capabilities, implementing automated failover mechanisms, and regularly backing up data to ensure data durability.

Additionally, using managed services that offer built-in redundancy and fault tolerance, such as managed databases or serverless computing, can contribute to high availability.
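
A quick calculation shows why redundancy across availability zones helps. The Python sketch below assumes that replicas fail independently of each other, which is a simplification: correlated failures, such as a region-wide outage, reduce the benefit in practice. The availability figures are illustrative, not taken from any provider's SLA.

```python
from typing import Iterable


def parallel_availability(single: float, replicas: int) -> float:
    """Availability of N redundant replicas where one healthy replica suffices.

    Assumes independent failures.
    """
    if not 0 <= single <= 1:
        raise ValueError("single must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1 - (1 - single) ** replicas


def serial_availability(components: Iterable[float]) -> float:
    """Availability of a chain where every component must be up."""
    result = 1.0
    for availability in components:
        result *= availability
    return result


# One instance at 99% versus the same instance in two zones.
print(round(parallel_availability(0.99, 1), 6))  # 0.99
print(round(parallel_availability(0.99, 2), 6))  # 0.9999

# A load balancer and a database, each at 99.9%, both required.
print(round(serial_availability([0.999, 0.999]), 6))  # 0.998001
```

The second function is a useful reminder in interviews: every additional component that a request must pass through lowers the overall availability unless it is made redundant as well.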

Monitoring and Observability

Monitoring and Observability, in the context of DevOps, refer to practices and tools used to gain insights into the health, performance, and behavior of software systems.

Monitoring involves collecting and analyzing metrics, logs, and events to track the system's state, identify issues, and ensure its stability.

Observability goes beyond monitoring by providing a holistic understanding of the system's internal workings, allowing for in-depth analysis, troubleshooting, and root cause identification.

Both monitoring and observability play crucial roles in maintaining the reliability, performance, and availability of applications in a DevOps environment.

Q21. What is the difference between monitoring and observability in a DevOps context?

A21. Monitoring typically refers to the process of collecting and analyzing metrics and logs from various components of a system to assess its health and performance. On the other hand, observability focuses on gaining insights into the system's internal state and behavior through metrics, logs, and distributed tracing, allowing for a deeper understanding of complex systems. Observability aims to provide a holistic view and facilitate troubleshooting and root cause analysis.

Q22. Which monitoring and observability tools or frameworks have you used, and what were their key features?

A22. I have experience working with tools like Prometheus, Grafana, Elasticsearch, Kibana, and Jaeger. Prometheus is a popular monitoring system with powerful query and alerting capabilities. Grafana is a visualization tool that integrates with Prometheus and other data sources. Elasticsearch and Kibana, together with Logstash, form the ELK stack for log analysis and visualization. Jaeger is a distributed tracing system. These tools provide metrics, logs, and tracing functionalities, allowing for effective monitoring and observability.

Q23. How do you handle and analyze application logs and metrics to identify performance issues or bottlenecks?

A23. To handle and analyze application logs and metrics, I centralize logs using tools like Elasticsearch and configure log aggregation to gain a unified view. Metrics are collected using monitoring agents or exporters and stored in a time-series database like Prometheus. I leverage visualization tools like Grafana to create dashboards and set up alerts based on predefined thresholds. Analyzing logs and metrics helps identify performance issues, troubleshoot bottlenecks, and optimize system behavior.
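
When analyzing latency metrics, it helps to look at percentiles rather than only the average, because a few slow requests can hide behind a reasonable-looking mean. The Python sketch below uses the nearest-rank method on a made-up list of response times in milliseconds; monitoring systems typically compute percentiles from histograms instead, so treat this as an illustration of the concept only.

```python
import math
from typing import List


def percentile(samples: List[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range 1..100")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times in milliseconds, with one slow outlier.
latencies_ms = [120, 95, 110, 105, 980, 100, 115, 90, 130, 125]

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p95_ms = percentile(latencies_ms, 95)

print(mean_ms, p50_ms, p95_ms)  # 197.0 110 980
```

Here the median says a typical request takes about 110 ms, while the 95th percentile exposes the 980 ms outlier that the mean only hints at.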

Q24. Have you implemented any alerting or notification systems for proactive monitoring? How did you set them up?

A24. Yes, I have implemented alerting and notification systems to proactively monitor infrastructure and applications. Using monitoring tools like Prometheus or cloud provider-specific services, I define alerting rules based on specific metrics or log patterns. These rules trigger notifications via email, chat platforms, or integrations with incident management systems like PagerDuty or OpsGenie. I ensure that alerting thresholds are carefully defined to avoid unnecessary noise while providing timely alerts for critical events.
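
One common way to avoid unnecessary noise is to fire an alert only when a threshold has been breached for several consecutive samples, similar in spirit to the `for` clause in Prometheus alerting rules. The Python sketch below shows that logic on a plain list; the function name, the threshold, and the sample values are assumptions for this example.

```python
from typing import Sequence


def should_alert(samples: Sequence[float], threshold: float, for_samples: int) -> bool:
    """Return True if the most recent `for_samples` values all exceed the threshold."""
    if for_samples <= 0:
        raise ValueError("for_samples must be positive")
    if len(samples) < for_samples:
        return False  # not enough data yet to be sure
    return all(value > threshold for value in samples[-for_samples:])


# Hypothetical CPU usage samples in percent, one per minute.
brief_spike = [40, 45, 95, 50, 42]
sustained_load = [40, 85, 92, 96, 91]

print(should_alert(brief_spike, 80, 3))     # False
print(should_alert(sustained_load, 80, 3))  # True
```

A single spike does not page anyone, while three minutes of sustained load does. Tuning `for_samples` is the trade-off between alert noise and detection delay.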

Q25. Can you explain the concept of distributed tracing and its significance in a microservices architecture?

A25. Distributed tracing is a method of tracking and visualizing requests as they flow through a complex system, typically in a microservices architecture. It captures timing and contextual information across different services and provides insights into the end-to-end flow of a request, helping identify performance bottlenecks and troubleshoot issues. Distributed tracing allows for understanding dependencies and interactions between services, aiding in optimizing performance, and improving overall system reliability.
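
The core mechanism behind distributed tracing is context propagation: every service that handles a request creates a span that shares the same trace ID and records which span called it. The Python sketch below models this with a hypothetical `Span` class and `start_span` helper; real systems use tracing libraries and pass the context between services in request headers.

```python
import uuid
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in one request
    span_id: str              # unique per unit of work
    parent_id: Optional[str]  # span that caused this one, None for the root
    service: str


def start_span(service: str, parent: Optional[Span] = None) -> Span:
    """Create a root span, or a child span that inherits the parent's trace ID."""
    trace_id = parent.trace_id if parent else uuid.uuid4().hex
    parent_id = parent.span_id if parent else None
    return Span(trace_id, uuid.uuid4().hex[:16], parent_id, service)


# Hypothetical request path: gateway -> orders -> payments.
gateway = start_span("gateway")
orders = start_span("orders", parent=gateway)
payments = start_span("payments", parent=orders)

trace: List[Span] = [gateway, orders, payments]
for span in trace:
    print(span.service, span.trace_id, span.parent_id)
```

Because all three spans carry the same trace ID, a tracing backend can reassemble them into a single end-to-end view, and the parent IDs reveal which service called which.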

I hope you found the DevOps interview questions and answers above useful!

We know that finding the right DevOps specialist can be a challenge. For us, it's a day-to-day request from our clients. Contact us and request an offer now, and we'll help make sure you're not left without a senior DevOps professional. Hire DevOps professionals from Bluebird!

To get to know more about DevOps, check out our blog post about DevOps tools!

To be the first to know about our latest blog posts, follow us on LinkedIn and Facebook!